Today is Safer Internet Day, and to mark the occasion we want to help you stay protected, empowered, and respected online.

With artificial intelligence (AI) as the hot topic, our cyber team is sharing the must-know information and tips about this rapidly advancing technology. AI is turning heads, with key discussions centring on the ethics, law and regulation surrounding it, as well as its use in crime. So, what is AI, and how can you stay aware of its associated dangers?

Our cyber team share the must-know information and tips around AI. (AI-generated image)

AI relates to the idea that machines can intelligently execute tasks by mimicking human behaviours and thought processes.

Humans:

Have cognition, influenced by past experiences and emotions,

Can empathise, be creative and use intuition,

Understand the wider context of situations and information and use reason to adapt through learning.

AI:

Can process and analyse vast amounts of complex data far faster than a human mind, working from statistical patterns and predefined rules,

Lacks emotions, intuition, creativity, and true understanding of context,

Can learn and adapt only within the limits of its programming and training data,

Cannot reason ethically or make moral judgments.

Machine learning (ML) is the way we "teach" a computer to make predictions and draw conclusions from data. An algorithm* is trained on data to identify patterns and make predictions.

Risk: ML bias (or algorithm bias) can skew output if left unchecked. In healthcare, for example, training data that underrepresents women or minority groups can skew the predictions of AI algorithms.

*What’s an algorithm? It’s a process or set of rules to be followed in calculations or problem-solving operations, especially by a computer. You could think of it as a set of instructions that need to be carried out in a specific order to perform a particular task (like tying your shoelace).
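To make the idea of "training" an algorithm a little more concrete, here is a minimal sketch in Python. It is a toy illustration, not how any real product works: the algorithm tries every possible cut-off value, keeps the one that best matches some labelled examples, and then reuses that learned rule on new data. The data and the function names `train_threshold` and `predict` are hypothetical.

```python
# A toy "machine learning" example: learn a single cut-off from labelled data.
# All data and function names here are hypothetical, for illustration only.

def train_threshold(values, labels):
    """Try each observed value as a cut-off and keep the one that classifies
    the most training examples correctly (predict 1 if value >= cut-off)."""
    best_cut, best_correct = None, -1
    for cut in sorted(set(values)):
        correct = sum((v >= cut) == bool(y) for v, y in zip(values, labels))
        if correct > best_correct:
            best_cut, best_correct = cut, correct
    return best_cut

def predict(cut, value):
    """Apply the learned rule to a new, unseen value."""
    return 1 if value >= cut else 0

# Hypothetical training data: hours of revision vs. test passed (1) or failed (0).
hours = [1, 2, 3, 4, 5, 6, 7, 8]
passed = [0, 0, 0, 0, 1, 1, 1, 1]

cut = train_threshold(hours, passed)
print(cut)                # 5
print(predict(cut, 6.5))  # 1
print(predict(cut, 2.5))  # 0
```

Real ML systems learn many more parameters from far more data, but the principle is the same: fit a rule to examples, then apply it to inputs the algorithm has not seen before. It also shows why bias matters, since the learned rule can only be as representative as the examples it was trained on.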

Deep learning (DL) is a subset of ML in which neural networks are trained to learn from data and recognise complex patterns. This makes it well suited to image recognition and natural language processing (see below). The main difference between ML and DL is the complexity of the models: DL uses neural networks with many layers.

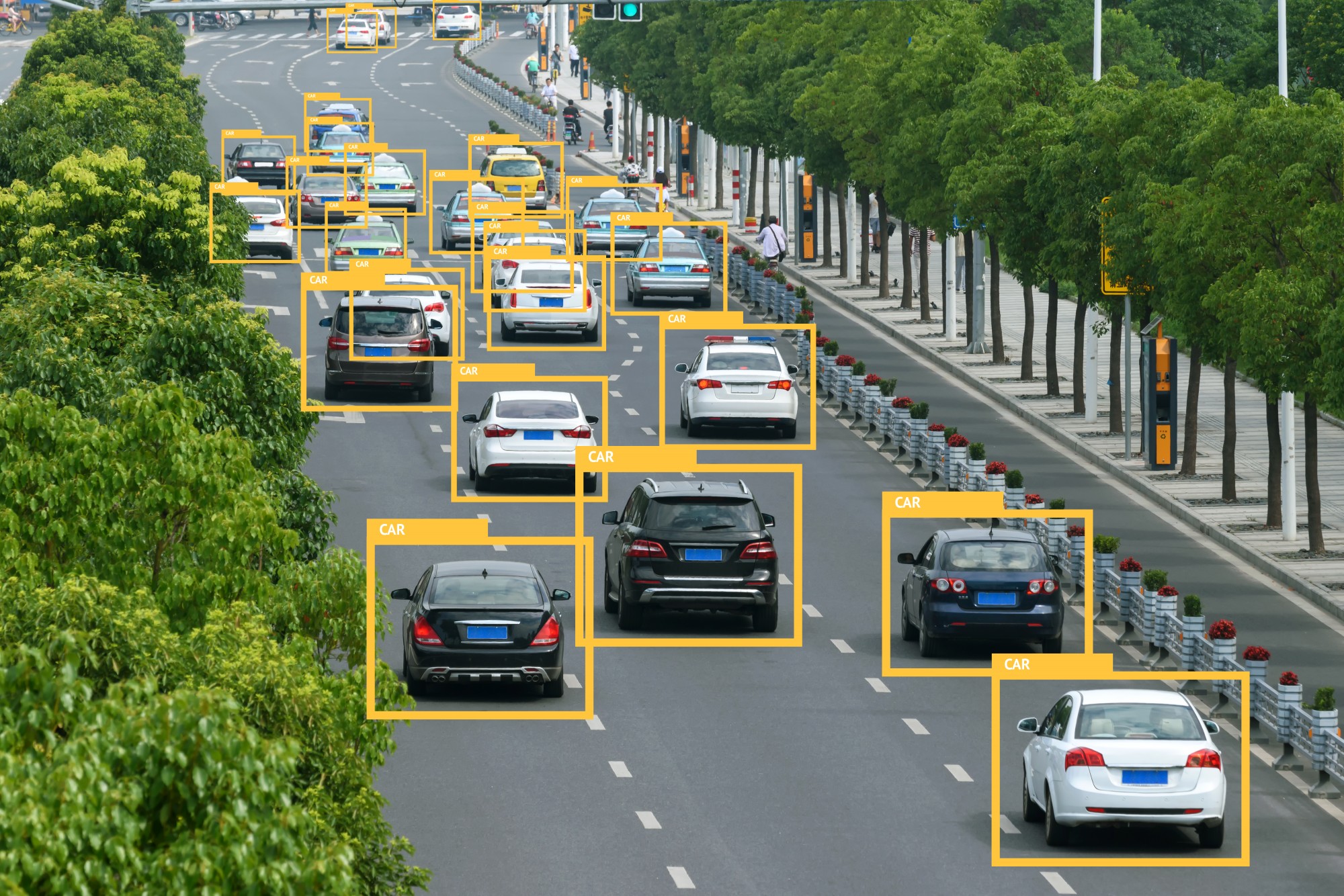

Computer Vision - Capabilities within AI to interpret the world visually through cameras, video, and images. Azure AI Vision is a good example of this, allowing blind and visually impaired people to harness the power of AI to describe nearby people, text, and objects.

Natural language processing (NLP) - Capabilities within AI for a computer to interpret written or spoken language and respond in kind.

ChatGPT is an NLP tool that interprets and generates natural language. GPT-4 (released March 2023) is said to be 10 times more intelligent than GPT-3.5 (the model behind ChatGPT's launch in November 2022), even though they were released only months apart!

“We have trained a model called ChatGPT which interacts in a conversational way. The dialogue format makes it possible for ChatGPT to answer follow up questions, admit its mistakes, challenge incorrect premises, and reject inappropriate requests” (OpenAI).

Document intelligence - Capabilities within AI that deal with managing, processing, and using high volumes of data found in forms and documents. You can use document intelligence to automate applications and workflows, such as processing contracts and medical, health and financial forms.

Knowledge mining - Capabilities within AI to extract information from large volumes of often unstructured data to create a searchable knowledge store. One example of a knowledge mining solution is ‘Azure Cognitive Search’ by Microsoft.

Generative AI - Capabilities within AI that create original content in a variety of formats, including natural language, images, code, and more. ChatGPT is generative AI: it takes natural language input and returns an appropriate response in a variety of formats.

AI is being used to improve safety while driving thanks to object detection and depth estimation.

AI technology is transforming the way we live and work. Here are a few examples of its positive uses around the world:

In translation – Google Translate is used to translate more than 100 billion words every day, quickly and easily. Netflix uses AI-powered translation, including for voiceovers and subtitles. The author Jeffrey Archer also saw his book produced in 47 languages after one read-through in English.

In healthcare – The NHS is using AI to halve treatment times for stroke patients and to provide quicker diagnoses for lung cancer patients. Consultants may use AI to support their interpretations, as it can sometimes spot an anomaly or recognise a pattern that a human might miss.

In the automotive industry – AI can be used for accident prevention. Tesla, for example, has installed an AI-powered interior camera above the rear-view mirror to monitor driver eye movement and detect drowsiness. The car also performs object detection and depth estimation. (Source: Forbes)

Read more about the Automated Vehicles Bill UK 2023.

In entertainment - Netflix collects data on its subscribers’ viewing habits and uses machine learning algorithms to understand their tastes and preferences, enabling highly personalised content recommendations (Spotify does something similar with music playlists) (quidgest.com).
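As a rough illustration of how viewing habits can drive recommendations, the sketch below uses one common, simple technique: compare viewers by the cosine similarity of their viewing histories, then suggest titles that the most similar viewer liked. This is not Netflix's or Spotify's actual method, which is not described here; the viewers, titles and function names are all hypothetical.

```python
import math

# Hypothetical viewing data: 1 = watched and liked, 0 = not watched.
titles = ["Drama A", "Comedy B", "Sci-fi C", "Documentary D", "Thriller E"]
viewers = {
    "alice": [1, 0, 1, 0, 1],
    "bob":   [1, 0, 1, 1, 1],
    "chloe": [0, 1, 0, 1, 0],
}

def cosine(a, b):
    """Cosine similarity: 1.0 means identical tastes, 0.0 means nothing in common."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0

def recommend(user):
    """Suggest titles liked by the most similar other viewer that `user` hasn't watched."""
    mine = viewers[user]
    others = [name for name in viewers if name != user]
    nearest = max(others, key=lambda name: cosine(mine, viewers[name]))
    return [t for t, seen, theirs in zip(titles, mine, viewers[nearest]) if theirs and not seen]

print(recommend("alice"))  # ['Documentary D']
```

Alice's history overlaps heavily with Bob's, so she is offered the one title Bob liked that she hasn't seen yet. Production recommenders combine many more signals, but "people with similar habits liked this" is the core intuition.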

In finance – AI is used in the detection of fraud, identifying and flagging transactions that don’t fit normal patterns.
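One of the simplest ways to flag a transaction that "doesn't fit normal patterns" is a statistical outlier test. The sketch below is a hypothetical illustration using a z-score, not how any particular bank detects fraud (real systems use far richer models and many more features): it measures how many standard deviations a new amount sits from a customer's usual spending and flags anything beyond a chosen threshold. The history, the amounts and the threshold of 3 are assumptions.

```python
import statistics

def flag_unusual(history, new_amounts, threshold=3.0):
    """Flag amounts more than `threshold` standard deviations away from the
    customer's usual spending (a simple z-score rule).
    `history` needs at least two past amounts."""
    mean = statistics.mean(history)
    spread = statistics.stdev(history)
    if spread == 0:
        # Every past amount was identical, so anything different is unusual.
        return [amount for amount in new_amounts if amount != mean]
    flagged = []
    for amount in new_amounts:
        z = (amount - mean) / spread
        if abs(z) > threshold:
            flagged.append(amount)
    return flagged

# Hypothetical card history in pounds: mostly small everyday purchases.
history = [12.50, 8.99, 23.40, 15.00, 9.75, 31.20, 18.60, 11.30]
print(flag_unusual(history, [14.00, 27.50, 950.00]))  # [950.0]
```

A flag like this is a prompt for a human check or a text to the customer, not proof of fraud; a genuine one-off purchase would be flagged in exactly the same way.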

Hyper-realistic digital forgeries known as deepfakes are blurring the lines between fact and fiction.

AI can be used to generate fake images and content, and all it needs is data, which can often be gleaned from social media. Deepfakes can be humorous, but they can also be harmful: they can be used for fake news, disinformation, slander, and crime, in image, video or audio format.

The more data available, and the higher its quality, the more convincing the resulting deepfake. The tools to create them are readily available on the internet.

AI can be used to generate fake images and content and all it needs is data which can often be gleaned from social media. (AI-generated image)

Recent examples in the media range from an entire TikTok account dedicated to deepfakes of Tom Cruise to cyber criminals using voice-cloning technology to deceive a bank manager in the United Arab Emirates into transferring $35 million to their accounts. Digital manipulation can even be used to create fake voice calls or voicemails in cases of kidnap and ransom.

The UK is also specifically targeting the most common deepfakes on the internet: non-consensual sexual depictions of women, often in compromising or violent situations. This form of harassment particularly affects women in the public eye, whose photos are widely available online (Politico).

Just last week the headlines read: “Fakers gonna fake, fake, fake, fake, fake ... time to fake it off” as non-consensual explicit AI deepfakes of Taylor Swift hit X, raising concerns once again as to why there is no legislation to prevent this (The Register).

It's becoming increasingly hard to detect deepfakes. AI can help, but as detection improves, so does the technology for making deepfakes, which become ever more sophisticated and harder to detect.

Lawmakers and law enforcers are worried, as even video evidence is being called into question as unreliable. A Europol report (worth a read), ‘Law enforcement and the challenge of deepfakes’, says that experts estimate as much as 90% of online content could be synthetically generated by 2026.

Defined ethical and legal standards are all playing catch-up with the technology.

AI and humans are treated differently under law – AI has no rights or duties under law; it is classed as property. So, who is responsible for the harm or damage caused?

Should people be held accountable instead? Designers, developers, owners, coders… all hold some responsibility. Clearly, ethical and legal standards are still playing catch-up with the technology.

The National AI Strategy was published in December 2022 to outline the government’s commitment to develop a pro-innovation national position on governing and regulating AI.

The General Data Protection Regulation (GDPR) and the Data Protection Act 2018 (DPA 2018) regulate the collection and use of personal data. Where AI uses personal data, for example to train, test or deploy an AI system, it falls within the scope of this legislation. The Information Commissioner’s Office (ICO) has published guidance on AI under the GDPR.

The Online Safety Act 2023 began as a bill to make provision for, and in connection with, the regulation by OFCOM of certain internet services; for and in connection with communications offences; and for connected purposes (bills.parliament.uk).

The National Crime Agency (NCA) has published useful supporting documentation for children and parents through its ‘Cyber Choices’ programme, covering the relevant laws and the consequences of breaking them.

Microsoft has six guiding principles for responsible AI: fairness, reliability and safety, privacy and security, inclusiveness, transparency, and accountability. Similar themes appear in the US, where the AI Risk Management Framework from the National Institute of Standards and Technology (NIST) describes trustworthy AI as, among other things, fair, reliable, secure, privacy-enhanced, transparent and accountable, and organises its guidance around four core functions: govern, map, measure, and manage.

The European Union has produced a draft AI Act (EU – AI Act (Draft) 2021), aiming to regulate the development of AI.

It is worth considering that if producers and manufacturers were entirely free of accountability, they might have no incentive to provide a good product or service, which could damage people’s trust in the technology; but regulations that are too strict could also stifle innovation (European Parliament).

The aims of AI regulatory instruments vary across nations, but shared themes such as transparency, accountability and privacy hint at the potential for collaborative governance in the future.

Don’t forget that Alexa and Siri are AI too (conversational AI)!

Be cautious when sharing personal data: Avoid providing excessive personal information to AI-powered platforms and understand how your data is used and stored.

Verify AI-generated content: Double-check information generated by AI models, as inaccuracies can occur. Don’t rely solely on AI for critical decisions.

Understand AI biases: Be aware that AI models can inherit biases from their training data. Stay informed and question AI-generated results that seem unfair or discriminatory.

Keep AI systems updated: Ensure that the AI applications you use are regularly updated to benefit from security patches and improvements. Outdated systems may be more vulnerable to exploitation.

Read and understand privacy policies: Before using AI-driven services, familiarise yourself with their privacy policies to know how your data is handled, stored, and shared. Make informed decisions based on this information.